Troubleshooting Raid Manager (RM6)

Following on from my original RM6 Cheat Sheet, I have put together this article to provide some troubleshooting tips that I have picked up over the past few years working with the arrays supported by the RAID Manager software.

Use healthchk to check all modules for recognisable faults:

# /usr/lib/osa/bin/healthchk -a

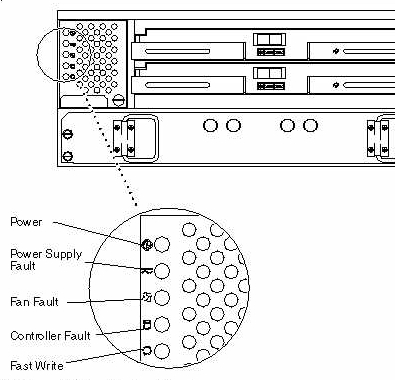

Check the LED status on the RDAC unit.

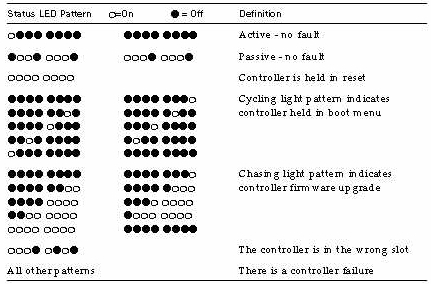

This is also available in the Maintenance and Tuning view within the RM6 GUI. The following image provides a status LED interpretation.

The Recovery view also offers the option of manual or automatic recovery of an array fault.

RM6 patches

Consult SunSolve enotify #20029 for the latest patches; it is the official patch/compatibility matrix and is publicly available.

Common Issues

Some common issues and troubleshooting tips

- Running probe-scsi-all at the OBP will show the controllers and LUNs attached. It WILL NOT SHOW ALL DISKS IN TRAYS.

- Make sure the /etc/osa/mnf file does not contain any dots (.) in the module names. For example:

  - mbc_001 = good

  - mbc.lab.001 = bad

- Use /usr/lib/osa/bin/healthchk -a to check for known problems. Use the Recovery Guru if any problems are found.

- Use /usr/lib/osa/bin/lad to see which controllers are available and which LUNs are on them.

- The output from /usr/sbin/format will show you which LUNs the OS sees.
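If you want to vet a module name before using it, the dot rule above can be checked with two small shell functions. This is only a sketch: `module_name_ok` and `suggest_module_name` are hypothetical helpers, not RM6 utilities, and swapping dots for underscores is simply one way to arrive at a name in the style of the mbc_001 example.

```shell
# Hypothetical helpers (not part of RM6) for vetting module names.

# Succeeds only if the name is non-empty and contains no dots.
module_name_ok() {
    case "$1" in
        "" | *.*) return 1 ;;
        *) return 0 ;;
    esac
}

# Prints the name with every dot replaced by an underscore.
suggest_module_name() {
    printf '%s\n' "$1" | tr '.' '_'
}
```

For example, `module_name_ok mbc.lab.001` fails, and `suggest_module_name mbc.lab.001` prints `mbc_lab_001`.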

Additional Information

First, try to get a module profile from the RM6 GUI (File -> Save Module Profile). If you do not have access to the GUI, you can gather the same information by running the following:

# storutil -c c#t#d#s# -d
# raidutil -c c#t#d#s# -i
# raidutil -c c#t#d#s# -B
# drivutil -d c#t#d#s#
# drivutil -i c#t#d#s#
# drivutil -I c#t#d#s#
# drivutil -l c#t#d#s#
# drivutil -p lun c#t#d#s#
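To capture all of these into one file (for example, to attach to a support call), the commands can be wrapped in a small function. This is a sketch under assumptions not stated in the article: the utilities live in /usr/lib/osa/bin (override with `OSA_BIN`), `lun` in the last command is a placeholder for a LUN number, and `DRY_RUN=1` is a hypothetical switch of this sketch that only prints the commands.

```shell
# Sketch: collect the CLI equivalent of a module profile into one file.
# Assumptions: utilities live in $OSA_BIN; DRY_RUN=1 only prints commands.
OSA_BIN=${OSA_BIN:-/usr/lib/osa/bin}

# Print a header line for one command, then run it (unless DRY_RUN=1).
run_cmd() {
    echo "### $*"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        return 0
    fi
    "$@" 2>&1
}

# Usage: collect_profile <c#t#d#s#> <lun> <outfile>
# A failing command does not stop the remaining ones.
collect_profile() {
    dev=$1 lun=$2 out=$3
    {
        run_cmd "$OSA_BIN/storutil" -c "$dev" -d
        run_cmd "$OSA_BIN/raidutil" -c "$dev" -i
        run_cmd "$OSA_BIN/raidutil" -c "$dev" -B
        run_cmd "$OSA_BIN/drivutil" -d "$dev"
        run_cmd "$OSA_BIN/drivutil" -i "$dev"
        run_cmd "$OSA_BIN/drivutil" -I "$dev"
        run_cmd "$OSA_BIN/drivutil" -l "$dev"
        run_cmd "$OSA_BIN/drivutil" -p "$lun" "$dev"
    } > "$out"
}
```

For example, `collect_profile c1t5d0s0 0 /var/tmp/module_profile.txt`; run it with `DRY_RUN=1` first to review exactly what will be executed.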

RM6 GUI shows nothing (or is incorrect)

Use the following procedure to resolve this issue:

- Exit the GUI.

- Remove the lock files:

# rm /etc/osa/lunlocks
# rm /etc/osa/locks/*.lock
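The lock-file cleanup can be wrapped in a function that still succeeds when some of the files are already gone. A minimal sketch: `clear_rm6_locks` is a hypothetical name, and its optional directory argument is an assumption added so it can be rehearsed on a scratch copy instead of /etc/osa. Exit the GUI before running it.

```shell
# Sketch: remove RM6 lock files. The optional argument (default /etc/osa)
# is a hypothetical override for rehearsing on a scratch directory.
clear_rm6_locks() {
    osa_dir=${1:-/etc/osa}
    # -f: do not complain if lunlocks is already absent
    rm -f "$osa_dir/lunlocks"
    for lock in "$osa_dir"/locks/*.lock; do
        [ -e "$lock" ] || continue   # glob matched nothing
        rm -f "$lock"
    done
}
```

Run `clear_rm6_locks` as root on the live system, then restart the GUI.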

Unable to load driver after OS upgrade

This is most likely because Solaris was upgraded from a 32-bit-only release (2.6 or earlier) to a release that supports both 32-bit and 64-bit (Solaris 7 or later). To resolve it:

- Remove the current packages:

# pkgrm SUNWosafw SUNWosamn SUNWosar SUNWosau

- Re-add the packages:

# pkgadd -d <pkg-location> SUNWosafw SUNWosamn SUNWosar SUNWosau
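The two steps can be combined so that the packages are only re-added if the removal succeeded. A sketch only: `reinstall_rm6_pkgs` is a hypothetical name, its argument stands in for `<pkg-location>`, and `DRY_RUN=1` is a hypothetical switch that prints the commands instead of running them.

```shell
# Sketch: remove and re-add the RM6 packages after a Solaris upgrade.
RM6_PKGS="SUNWosafw SUNWosamn SUNWosar SUNWosau"

# Usage: reinstall_rm6_pkgs <pkg-location>
reinstall_rm6_pkgs() {
    pkg_dir=$1
    if [ -z "$pkg_dir" ]; then
        echo "usage: reinstall_rm6_pkgs <pkg-location>" >&2
        return 2
    fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "pkgrm $RM6_PKGS"
        echo "pkgadd -d $pkg_dir $RM6_PKGS"
        return 0
    fi
    # $RM6_PKGS is left unquoted on purpose so it splits into four names.
    pkgrm $RM6_PKGS && pkgadd -d "$pkg_dir" $RM6_PKGS
}
```

pkgrm and pkgadd still prompt interactively, so run it from a terminal as root.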

Controller held in reset

First, try to resolve it with the RM6 software:

# /usr/lib/osa/bin/rdacutil -u <module name>
# /usr/lib/osa/bin/rdacutil -U <module name>

If that fails, perform a hardware recovery:

- Power down the controllers.

- Dislodge the controller showing a good LED status.

- Power up the reset controller.

This should bring the reset controller to normal mode.

Firmware will not upgrade due to I/O

This is most likely because Volume Manager has control of the LUNs. To resolve it:

- Stop all volumes on those LUNs.

- Deport the disk group the LUNs are in.

- Retry the firmware upgrade.

Note: if the LUNs are in rootdg, you will need to turn VxVM off completely. This may involve unencapsulation procedures.
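The first two steps can be sketched for a single disk group with the standard VxVM commands `vxvol -g <dg> stopall` and `vxdg deport <dg>`. This assumes you already know the disk group name and have unmounted any file systems on its volumes (vxvol will not stop a volume that is open). Following the note above, it refuses rootdg. `release_diskgroup` and `DRY_RUN=1` are hypothetical names used only in this sketch.

```shell
# Sketch: stop all volumes in a disk group, then deport it, so RM6 can
# upgrade firmware on its LUNs. DRY_RUN=1 only prints the commands.
release_diskgroup() {
    dg=$1
    case "$dg" in
        "")
            echo "usage: release_diskgroup <diskgroup>" >&2
            return 2 ;;
        rootdg)
            # rootdg cannot be deported; VxVM has to be turned off instead.
            echo "rootdg cannot be deported; turn VxVM off instead" >&2
            return 1 ;;
    esac
    run=""
    [ "${DRY_RUN:-0}" = 1 ] && run="echo"
    # Deport only if every volume stopped cleanly.
    $run vxvol -g "$dg" stopall && $run vxdg deport "$dg"
}
```

After the firmware upgrade, the group can be brought back with `vxdg import <dg>` and its volumes restarted with `vxvol -g <dg> startall`.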

Firmware upgrade fails using the gui

This most likely means the controller is now a doorstop. However, try using the fwutil command first; if this also fails, the controller is a doorstop.

# fwutil /usr/lib/osa/fw/xxxxxx.bwd c#t#d#s#
# fwutil /usr/lib/osa/fw/xxxxxx.apd c#t#d#s#
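The two fwutil steps can be run in the order listed, stopping before the .apd file if the .bwd download fails. A sketch only: `flash_controller` is a hypothetical name, the file arguments stand in for the article's `xxxxxx` placeholders, and `DRY_RUN=1` is a hypothetical switch that prints the commands instead of running them.

```shell
# Sketch: run the two fwutil downloads in order for one controller device.
# Usage: flash_controller <file.bwd> <file.apd> <c#t#d#s#>
flash_controller() {
    bwd=$1 apd=$2 dev=$3
    # Refuse to start unless both firmware files are present.
    for f in "$bwd" "$apd"; do
        if [ ! -f "$f" ]; then
            echo "missing firmware file: $f" >&2
            return 1
        fi
    done
    run=""
    [ "${DRY_RUN:-0}" = 1 ] && run="echo"
    # The .bwd file first, then the .apd file, as listed above.
    $run fwutil "$bwd" "$dev" && $run fwutil "$apd" "$dev"
}
```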

Two modules have the same name

You may get a name-change warning and find two modules with the same name. To resolve it:

- Use the Configuration GUI or raidutil -i to locate the empty module (the empty one will most often be the original).

- Delete the empty module. The newly named module will take its place (this is normal).

Lad and format don't match

- Syncing up lad and format output for pre-6.22 and/or Solaris 7:

# cd /dev/dsk
# rm c?t?d*    (all the c#t#'s that are associated with the LUN)
# cd /dev/rdsk
# rm c?t?d*    (all the c#t#'s that are associated with the LUN)
# cd /dev
# rm -r osa
# cd /devices/pseudo/
# rm -r rdnexus@*
# reboot -- -r

Check the devices, and if either lad or format is still missing devices, issue another reboot -- -r to do another reconfiguration boot.

- Syncing up lad and format output for 6.22 and/or Solaris 7:

PROCEDURE TO FOLLOW ONCE I CONFIRM IT IS ROCK SOLID TO RELEASE
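For the pre-6.22 procedure above, the link cleanup can be rehearsed and then run as a shell function. A sketch under stated assumptions: `clean_rm6_links` and the `ROOT` prefix are hypothetical (ROOT defaults to empty, meaning the live system, and exists only so you can try it on a scratch tree first); you pass the c#t# targets associated with the LUN, for example c1t5; and the reconfiguration reboot is left to you.

```shell
# Sketch of the pre-6.22 link cleanup for one or more c#t# targets.
# ROOT is a hypothetical prefix (default empty = live system). No reboot.
clean_rm6_links() {
    root=${ROOT:-}
    if [ $# -eq 0 ]; then
        echo "usage: clean_rm6_links c#t# [c#t# ...]" >&2
        return 2
    fi
    # Validate every target first: c#t# only, no d#/s# suffix.
    for target in "$@"; do
        case "$target" in
            *[!ct0-9]*) echo "bad target: $target" >&2; return 2 ;;
            c[0-9]*t[0-9]*) ;;
            *) echo "bad target: $target" >&2; return 2 ;;
        esac
    done
    for target in "$@"; do
        # Block and raw device links for this target (c#t#d*).
        rm -f "$root/dev/dsk/${target}d"* "$root/dev/rdsk/${target}d"*
    done
    # RM6 device directory and the rdnexus pseudo-device nodes.
    rm -rf "$root/dev/osa"
    rm -rf "$root/devices/pseudo/"rdnexus@*
    echo "Links removed; now run: reboot -- -r"
}
```

For example, `ROOT=/var/tmp/rehearsal clean_rm6_links c1t5` exercises it on a copy; on the live system, run `clean_rm6_links c1t5` as root and then `reboot -- -r`.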

Problems getting 16 LUN support

If you have problems getting 16 LUN support to work on PCI-based systems with A1000 or RSM2000 arrays, review SunSolve SRDB #21234.

This is usually an issue with an incorrect entry in the /kernel/drv/glm.conf file. Use the following entries to enable 16 LUN support on PCI (E250/E450) systems with A1000/RSM2000 arrays:

device-type-scsi-options-list =
    "Symbios StorEDGE A1000", "lsi-scsi-options",
    "Symbios StorEDGE A3000", "lsi-scsi-options",
    "SYMBIOS RSM Array 2000", "lsi-scsi-options";
lsi-scsi-options = 0x107f8;
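Before rebooting, it can help to confirm that glm.conf actually carries these entries outside a comment. A sketch only: `check_glm_conf` is a hypothetical helper; it assumes the file lives in /kernel/drv (the usual home of driver .conf files) unless you pass another path, and it only checks that the two property names and the 0x107f8 value are present, not the exact inquiry strings.

```shell
# Sketch: report whether glm.conf contains the entries shown above.
# Returns 0 if both are present, 1 otherwise.
check_glm_conf() {
    conf=${1:-/kernel/drv/glm.conf}
    missing=0
    for pattern in 'device-type-scsi-options-list' \
                   'lsi-scsi-options *= *0x107f8'; do
        # Drop '#' comment lines before matching.
        if ! grep -v '^#' "$conf" | grep -q "$pattern"; then
            echo "missing: $pattern"
            missing=1
        fi
    done
    return $missing
}
```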

For CLARiiON arrays, see SunSolve InfoDoc #20966.

Adding 16/32 lun support

Pre-6.22 (RM6.1 and above)

Make sure the following patches are installed:

- Kernel patch 105181-xx (latest)

- ISP patch 105600-xx (the latest revision provides 32 LUN support)

- Patch 105356-xx (Solaris 2.6 sd patch)

- Procedure:

  - Edit the /usr/lib/osa/rmparams file and set the System_MaxLunsPerController variable to 16.

  - Go to the /usr/lib/osa/bin directory and run the add16lun.sh script:

    # cd /usr/lib/osa/bin
    # ./add16lun.sh

  - Perform a reconfiguration reboot (reboot -- -r).
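The rmparams edit can be scripted with a backup of the original file. A sketch under a loud ASSUMPTION: that rmparams stores settings as `Name=Value` lines, so check your copy before relying on it. `set_max_luns` is a hypothetical name; it accepts only 16 or 32, and if no System_MaxLunsPerController line is found, the file is left unchanged and the function returns non-zero.

```shell
# Sketch: set System_MaxLunsPerController in rmparams, keeping a backup.
# ASSUMPTION: rmparams uses Name=Value lines. Verify before use.
# Usage: set_max_luns <16|32> [rmparams-path]
set_max_luns() {
    luns=$1
    file=${2:-/usr/lib/osa/rmparams}
    case "$luns" in
        16|32) ;;
        *) echo "expected 16 or 32, got: $luns" >&2; return 2 ;;
    esac
    cp -p "$file" "$file.orig" || return 1     # backup of the original
    sed "s/^System_MaxLunsPerController=.*/System_MaxLunsPerController=$luns/" \
        "$file.orig" > "$file"
    # Succeed only if the new value is now in place.
    grep -q "^System_MaxLunsPerController=$luns\$" "$file"
}
```

Run add16lun.sh and the reconfiguration reboot afterwards, exactly as in the procedure above.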

16 and 32 LUN support for 6.22

If you are running 6.22, you can either download these scripts from SunSolve or find them in the Tools directory of the RAID Manager 6.22 CD-ROM. Maximum LUN support has been increased to 32 when running 6.22 on some systems.

Files included in the tarball:

- README

- add16lun.sh

- add32lun.sh

Additional troubleshooting scenarios

Review Chapter 4 of the A3500/A3500FC Controller Module Guide (Doc ID 805-4980-11).